Transforming Data into Actionable Insights

Data Engineering

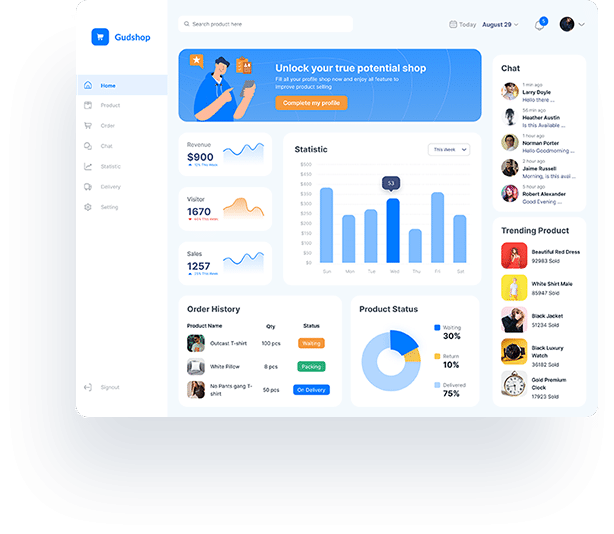

Data Engineering involves collecting, processing, and organizing vast datasets to ensure they are accessible and ready for analysis. It's the backbone of data-driven decision-making, optimizing data flow and quality.

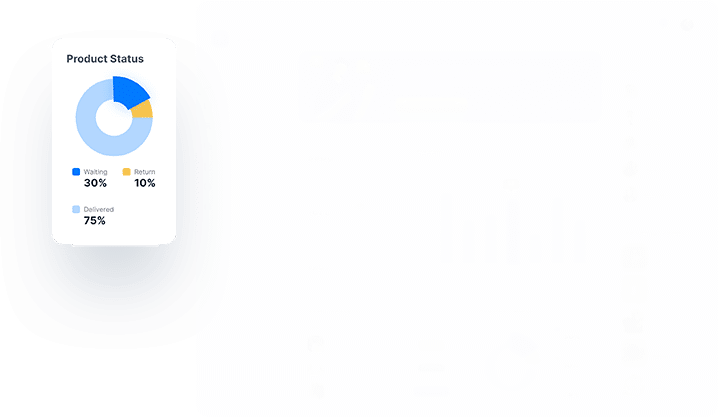

Big Data Analytics is the process of examining these massive datasets to uncover insights, trends, and patterns. Businesses of every size can benefit: smaller companies from simpler data analysis, and larger enterprises from handling more extensive and intricate datasets.

Enhanced Data Utilization

Operational Efficiency

Expedited Decision-Making

Competitive Advantage

Our team excels in creating and implementing scalable data architectures capable of managing the volume and complexity of contemporary data sources.

Certainty uses a suite of tools and technologies, including Apache Spark, PySpark, Azure Data Factory, Azure Databricks, and AWS Glue, among others, to keep data engineering efficient.

"Certainty Infotech's Data Engineering expertise has been instrumental in streamlining our data processes. Their solutions have reduced data latency and improved our ability to respond to market changes."